Introduction

We'll cover how to use Headless Chrome to scrape Google Places. Google Places does not strictly require JavaScript, because Google serves a different response when JavaScript is disabled. For better user emulation when browsing and scraping Google Places, though, a browser is recommended.

Headless Chrome is essentially the Chrome browser running without a head (no graphical user interface). The benefit is that you can run a headless browser in a server environment that has no graphical interface attached and is normally accessed through a shell. Running headless can also be faster and use fewer system resources.

Controlling a browser

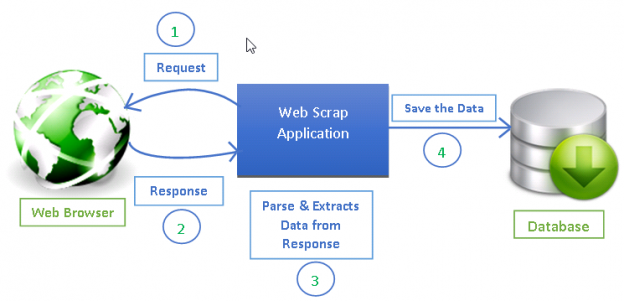

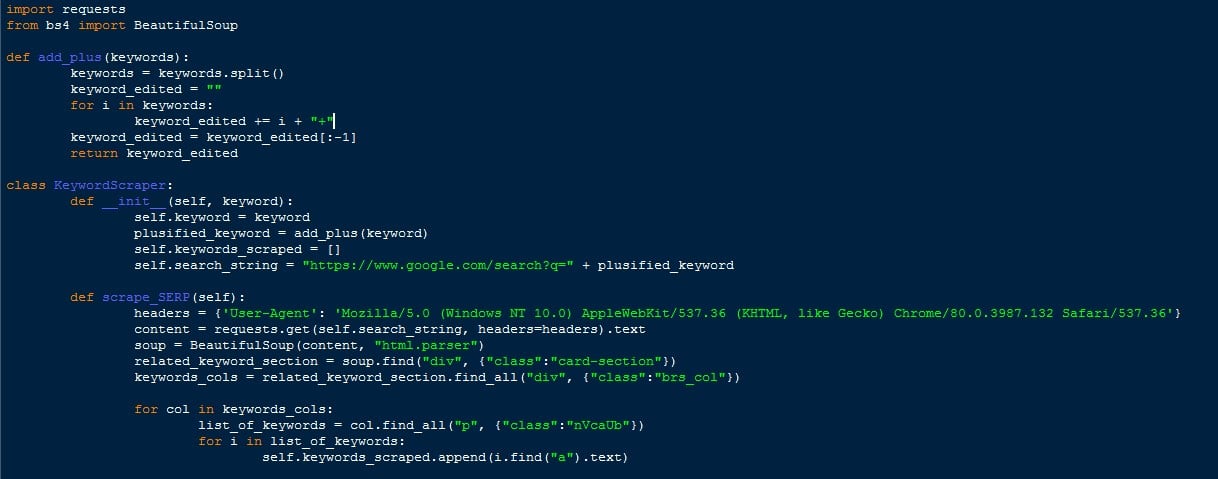

We need a way to control the browser with code. This can be done through the Chrome DevTools Protocol (CDP). CDP is essentially a WebSocket server running in the browser that exchanges JSON-RPC-style messages. Instead of working with CDP directly, we'll use a library called pyppeteer, a Python library that speaks CDP for us and provides an easier-to-use abstraction. It's a port of the Node library puppeteer.

Setting up

As usual with any of my python projects, I recommend working in a virtual python environment which helps us address dependencies and versions separately for each application / project. Let's create a virtual environment in our home directory and install the dependencies we need.

Make sure you are running at least Python 3.6.1; Python 3.5 has reached end of support. The pyppeteer library will not work with Python 3.6.0, because the websockets library it depends on does not support that version.

Let's create the following folders and files.

We created a __main__.py file, which lets us run the Google Places scraper with the following command (nothing should happen right now):

Launching a headless browser

We need to launch a Chrome browser. By default, pyppeteer downloads a recent version of Chromium the first time it runs. It's also possible to use Chrome, as long as it is installed on your system. The library uses async/await for concurrency, so we import the asyncio package from Python's standard library.

To launch with Chrome instead of Chromium, add the executablePath option to the launch function. Below, we launch the browser, navigate to Google and take a screenshot. The screenshot is saved in the folder from which you run the scraper.
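A minimal sketch of that launch, navigate and screenshot flow, assuming pyppeteer is installed. The helper names `build_launch_options` and `take_screenshot` and the output file name `google.png` are illustrative and not from the original article.

```python
import asyncio


def build_launch_options(executable_path=None, headless=True):
    """Build the keyword options passed to pyppeteer's launch()."""
    options = {"headless": headless}
    if executable_path:
        # Point pyppeteer at an installed Chrome instead of its downloaded Chromium.
        options["executablePath"] = executable_path
    return options


async def take_screenshot(url, path, executable_path=None):
    # Imported here so the option helper above works without pyppeteer installed.
    from pyppeteer import launch

    browser = await launch(**build_launch_options(executable_path))
    try:
        page = await browser.newPage()
        await page.goto(url)
        await page.screenshot({"path": path})
    finally:
        # Always close the browser, even if navigation fails.
        await browser.close()


def main():
    asyncio.run(take_screenshot("https://www.google.com", "google.png"))
```

`asyncio.run` requires Python 3.7 or newer; on Python 3.6 the equivalent is `asyncio.get_event_loop().run_until_complete(...)`.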

Digging in

Let's create some functions in core/browser.py to simplify working with a browser and the page. We'll make use of what I believe is an awesome Python feature for simplifying resource management: context managers. Specifically, we will use an async context manager.

An asynchronous context manager is a context manager that is able to suspend execution in its enter and exit methods.

This feature in python lets us write code like the below which handles opening and closing a browser with one line.

Let's add the PageSession async context manager in the file core/browser.py.
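One way such a PageSession could look is sketched below. This is an assumption-laden reconstruction, not the article's original code: the `launcher` parameter is a hypothetical hook added here so the class can be exercised without a real browser, and the `networkidle0` wait is one possible way to let JavaScript finish.

```python
import asyncio


class PageSession:
    """Open a browser page on entry and close the browser on exit."""

    def __init__(self, url, launcher=None):
        self.url = url
        # Hypothetical hook for swapping in a test double; defaults to pyppeteer.launch.
        self._launcher = launcher
        self.browser = None
        self.page = None

    async def __aenter__(self):
        if self._launcher is None:
            from pyppeteer import launch
            self._launcher = launch
        self.browser = await self._launcher(headless=True)
        self.page = await self.browser.newPage()
        # Wait until the network is idle so JavaScript-rendered content is present.
        await self.page.goto(self.url, {"waitUntil": "networkidle0"})
        return self

    async def __aexit__(self, exc_type, exc, traceback):
        # Runs on normal exit and on exceptions, so the browser never leaks.
        if self.browser is not None:
            await self.browser.close()


async def print_rendered_html(url):
    async with PageSession(url) as session:
        print(await session.page.content())
```

Because `__aenter__` and `__aexit__` are coroutines, opening and closing the browser can suspend without blocking other tasks on the event loop.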

In our google-places/__main__.py file let's make use of our new PageSession and print the html content of the final rendered page with javascript executed.

Run the google-places module in your terminal with the same command we used earlier.

So now we can launch a browser, open a page (a tab in Chrome), navigate to a website, wait for JavaScript to finish loading and executing, and then close the browser, all with the above code.

Next let's do the following:

- Visit google.com

- Enter a search query for pediatrician near 94118

- Click on Google Places to see more results

- Scrape results from the page

- Save results to a CSV file

Navigating pages

We want to follow this sequence of page navigations so we can pull the data we need.

Let's start by breaking up our code in google-places/__main__.py so we can first search then navigate to google places. We also want to clean up some of the string literals like the google url.

You can see the new code we added above as it has been highlighted. We use XPath to find the search bar, the search button and the view all button to get us to google places.

- Type in the search bar

- Click the search button

- Wait for the view all button to appear

- Click view all button to take us to google places

- Wait for an element on the new page to appear
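The five steps above can be sketched with pyppeteer's `waitForXPath`, `xpath`, `type` and `click` calls. The function names and the `xpaths` dictionary keys are hypothetical, and the actual XPath expressions for Google's pages are deliberately left to the caller because they are not given here and change over time.

```python
import asyncio


async def first_by_xpath(page, xpath):
    """Wait for an XPath match to appear, then return the first element."""
    await page.waitForXPath(xpath)
    matches = await page.xpath(xpath)
    return matches[0]


async def search_and_open_places(page, query, xpaths):
    """Run the search flow; `xpaths` maps step names to XPath strings."""
    search_bar = await first_by_xpath(page, xpaths["search_bar"])
    await search_bar.type(query)  # 1. type in the search bar

    search_button = await first_by_xpath(page, xpaths["search_button"])
    await search_button.click()  # 2. click the search button

    # 3. wait for the "view all" button, then 4. click it
    view_all = await first_by_xpath(page, xpaths["view_all"])
    await view_all.click()

    # 5. wait for an element that only exists on the places page
    await page.waitForXPath(xpaths["places_marker"])
```

Waiting for an element before each interaction avoids acting on a page that has not finished rendering.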

Scraping the data with Pyppeteer

At this point we should be on the google places page and we can pull the data we want. The navigation flow we followed before is important for emulating a user.

Let's define the data we want to pull from the page.

- Name

- Location

- Phone

- Rating

- Website Link

In core/browser.py let's add two methods to our PageSession to help us grab the text and an attribute (the website link for the doctor).

So we added get_text and get_link. These two methods will evaluate javascript on the browser, the same way if you were to type it on the Chrome console. You can see that they just use the DOM to grab the text of the element or the href attribute.
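A sketch of what these two helpers might look like. They are written here as plain functions that take a pyppeteer page, whereas the article adds them as methods on PageSession; the JavaScript snippets are assumptions about how the text and href are read.

```python
import asyncio


async def get_text(page, element):
    """Return the text of an element by evaluating JavaScript in the page."""
    return await page.evaluate("(element) => element.textContent", element)


async def get_link(page, element):
    """Return the href attribute of an element, or None if it has none."""
    return await page.evaluate("(element) => element.getAttribute('href')", element)
```

`page.evaluate` passes the element handle into the arrow function, so the JavaScript runs against the live DOM node exactly as it would in the Chrome console.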

In google-places/__main__.py we will add a few functions that will grab the content that we care about from the page.

We use XPath to grab the elements. You can practice XPath in your Chrome browser by pressing F12 or right-clicking and choosing Inspect to open the developer tools. Why do I use XPath? It makes complex selectors easier to specify, because XPath has built-in functions for things like finding elements that contain some text or traversing the tree in various ways.

For the phone, rating and link fields we default to None and substitute with 'N/A' because not all doctors have a phone number listed, a rating or a link. All of them seem to have a location and a name.

Because there are many doctors listed on the page, we want to find the parent element and loop over each match, then evaluate the XPath we defined above. To do this, let's add two more functions to tie it all together.

The entry point here is scrape_doctors which evaluates get_doctor_details on each container element.

In the code below, we loop over each container element that matched our XPath and call get_doctor_details on it. Because we don't use the await keyword, each call returns a coroutine object rather than a result. The asyncio.gather call then schedules and evaluates all of the coroutines in the tasks list.

This line allows us to wait for all async calls to finish concurrently.
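The gather pattern can be shown with the standard library alone. In this simplified sketch the containers are plain dictionaries and `get_doctor_details` is a stand-in for the real function, which would await XPath lookups on the page instead.

```python
import asyncio


async def get_doctor_details(container):
    """Stand-in for the real scraper: returns one row for a container."""
    await asyncio.sleep(0)  # placeholder for awaiting lookups on the page
    return {
        "name": container["name"],
        # Optional fields default to None and are reported as 'N/A'.
        "phone": container.get("phone") or "N/A",
    }


async def scrape_doctors(containers):
    # Calling without `await` creates coroutine objects; nothing runs yet.
    tasks = [get_doctor_details(container) for container in containers]
    # gather() runs them concurrently and returns results in input order.
    return await asyncio.gather(*tasks)
```

Since `gather` preserves input order, the rows line up with the order of the containers on the page.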

Let's put this together in our main function. First we search and crawl to the right page, then we scrape with scrape_doctors.

Saving the output

In core/utils.py we'll add two functions to help us save our scraped output to a local CSV file.
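A possible shape for those two helpers, using only the standard csv module. The names `normalize_row` and `save_to_csv` and the lowercase field names are assumptions chosen to match the data listed earlier, not the article's original code.

```python
import csv

FIELDS = ["name", "location", "phone", "rating", "link"]


def normalize_row(row, fields=FIELDS, missing="N/A"):
    """Return a dict with exactly `fields`, replacing absent or None values."""
    return {
        field: row[field] if row.get(field) is not None else missing
        for field in fields
    }


def save_to_csv(path, rows, fields=FIELDS):
    """Write the scraped rows to `path` with a header line."""
    # newline="" lets the csv module control line endings on every platform.
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fields)
        writer.writeheader()
        for row in rows:
            writer.writerow(normalize_row(row, fields))
```

Normalizing each row first guarantees that every line in the file has the same columns, even when a listing has no phone number, rating or website.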

Let's import it in google-places/__main__.py and save the output of scrape_doctors from our main function.

We should now have a file called pediatricians.csv which contains our output.

Wrapping up

From this guide we should have learned how to use a headless browser to crawl and scrape Google Places while emulating a real user. There's a lot more you can do with headless browsers, such as generating PDFs, taking screenshots and other automation tasks.

Hopefully this guide helped you get started executing javascript and scraping with a headless browser. Till next time!

In the last tutorial we learned how to leverage the Scrapy framework to solve common web scraping problems. Today we are going to take a look at Selenium (with Python ❤️ ) in a step-by-step tutorial.

Selenium refers to a number of different open-source projects used for browser automation. It supports bindings for all major programming languages, including our favorite language: Python.

The Selenium API uses the WebDriver protocol to control a web browser, like Chrome, Firefox or Safari. The browser can run either locally or remotely.

At the beginning of the project (almost 20 years ago!) it was mostly used for cross-browser, end-to-end testing (acceptance tests).

Now it is still used for testing, but it is also used as a general browser automation platform. And of course, it is used for web scraping!

Selenium is useful when you have to perform an action on a website such as:

- Clicking on buttons

- Filling forms

- Scrolling

- Taking a screenshot

It is also useful for executing JavaScript code. Let's say that you want to scrape a Single Page Application and you haven't found an easy way to call the underlying APIs directly. In this case, Selenium might be what you need.

Installation

We will use Chrome in our example, so make sure you have the following installed on your local machine:

- Chrome

- The ChromeDriver binary

- The selenium package

To install the Selenium package, as always, I recommend that you create a virtual environment (for example using virtualenv) and then:

Quickstart

Once you have downloaded both Chrome and Chromedriver and installed the Selenium package, you should be ready to start the browser:

This will launch Chrome in headful mode (like regular Chrome, but controlled by your Python code). You should see a message stating that the browser is controlled by automated software.

Chrome can also run in headless mode (without any graphical user interface), which is what you want when running it on a server. See the following example:

The driver.page_source will return the full page HTML code.

Here are two other interesting WebDriver properties:

- driver.title gets the page's title

- driver.current_url gets the current URL (this can be useful when there are redirections on the website and you need the final URL)
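A minimal sketch of a headless run that reads all three properties, assuming the selenium package and a matching ChromeDriver are available. The function name `fetch_page_info` and the window size are illustrative choices.

```python
HEADLESS_ARGUMENTS = ["--headless", "--window-size=1920,1200"]


def fetch_page_info(url, arguments=HEADLESS_ARGUMENTS):
    """Open `url` in headless Chrome and return its title, final URL and HTML."""
    # Imported inside the function so the module can be loaded without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    for argument in arguments:
        options.add_argument(argument)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return {
            "title": driver.title,
            "final_url": driver.current_url,  # differs from `url` after redirects
            "html": driver.page_source,
        }
    finally:
        # quit() ends the session and closes every window it opened.
        driver.quit()
```

Calling `driver.quit()` in a `finally` block matters on a server, where leaked Chrome processes accumulate quickly.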

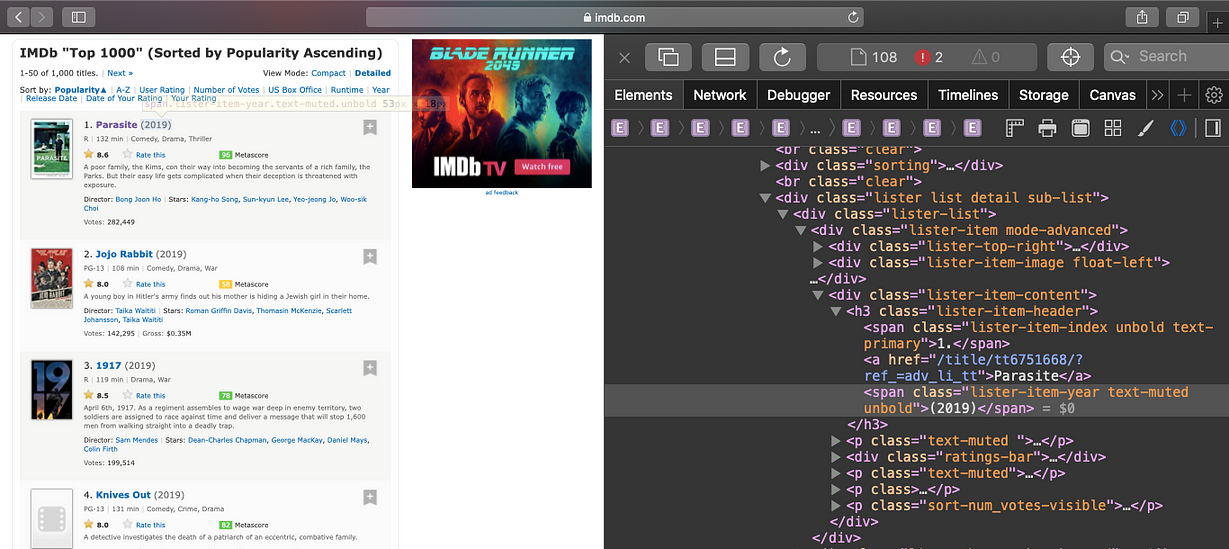

Locating Elements

Locating data on a website is one of the main use cases for Selenium, either for a test suite (making sure that a specific element is present/absent on the page) or to extract data and save it for further analysis (web scraping).

There are many methods available in the Selenium API to select elements on the page. You can use:

- Tag name

- Class name

- IDs

- XPath

- CSS selectors

We recently published an article explaining XPath. Don't hesitate to take a look if you aren't familiar with XPath.

As usual, the easiest way to locate an element is to open your Chrome dev tools and inspect the element that you need. A cool shortcut for this is to highlight the element you want with your mouse and then press Ctrl + Shift + C (or Cmd + Shift + C on macOS) instead of having to right-click and choose Inspect each time:

find_element

There are many ways to locate an element in Selenium. Let's say that we want to locate the h1 tag in this HTML:

All these methods also have find_elements (note the plural) to return a list of elements.

For example, to get all anchors on a page, use the following:
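As a hedged sketch, collecting every link on a page could look like the following. It uses the `find_elements(By.TAG_NAME, ...)` form from Selenium 4, where the older `find_element_by_*` helpers are no longer available; the function name `collect_links` is illustrative.

```python
def collect_links(driver):
    """Return the href of every anchor on the current page, skipping empty ones."""
    # Imported inside the function so the module can be loaded without Selenium installed.
    from selenium.webdriver.common.by import By

    anchors = driver.find_elements(By.TAG_NAME, "a")
    hrefs = (anchor.get_attribute("href") for anchor in anchors)
    return [href for href in hrefs if href]
```

Unlike `find_element`, `find_elements` returns an empty list when nothing matches, so no exception handling is needed here.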

Some elements aren't easily accessible with an ID or a simple class, and that's when you need an XPath expression. You also might have multiple elements with the same class (the ID is supposed to be unique).

XPath is my favorite way of locating elements on a web page. It's a powerful way to extract any element on a page, based on its absolute position in the DOM or its position relative to another element.

WebElement

A WebElement is a Selenium object representing an HTML element.

There are many actions that you can perform on those HTML elements, here are the most useful:

- Accessing the text of the element with the property element.text

- Clicking on the element with element.click()

- Accessing an attribute with element.get_attribute('class')

- Sending text to an input with element.send_keys('mypassword')

There are some other interesting methods like is_displayed(). This returns True if an element is visible to the user.

This can be useful for avoiding honeypots (such as hidden inputs that only a bot would fill in).

Honeypots are mechanisms used by website owners to detect bots. For example, if an HTML input has the attribute type=hidden like this:

This input value is supposed to be blank. If a bot visits a page and fills all of the inputs on a form with random values, it will also fill the hidden input. A legitimate user would never fill the hidden input, because it is not rendered by the browser.

That's a classic honeypot.
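One way to stay clear of such traps is to fill only the inputs a user could actually see. The sketch below assumes `elements` is a list of WebElement objects, for example the result of a `find_elements` call; the function name `visible_inputs` is hypothetical.

```python
def visible_inputs(elements):
    """Keep only inputs a real user could see and fill in."""
    return [
        element
        for element in elements
        # Skip type="hidden" inputs and anything hidden by CSS.
        if element.get_attribute("type") != "hidden" and element.is_displayed()
    ]
```

Checking `is_displayed()` as well as the `type` attribute also catches inputs that are hidden with styling rather than with `type=hidden`.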

Full example

Here is a full example using Selenium API methods we just covered.

We are going to log into Hacker News:

In our example, authenticating to Hacker News is not really useful on its own. However, you could imagine creating a bot to automatically post a link to your latest blog post.

In order to authenticate we need to:

- Go to the login page using driver.get()

- Select the username input using driver.find_element_by_* and then element.send_keys() to send text to the input

- Follow the same process with the password input

- Click on the login button using element.click()

Should be easy right? Let's see the code:
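A sketch of those four steps using the Selenium 4 `find_element(By.XPATH, ...)` form. The three XPath selectors are assumptions about the Hacker News login page markup and may need adjusting; the function name `login` is illustrative.

```python
LOGIN_URL = "https://news.ycombinator.com/login"


def login(driver, username, password):
    """Fill in and submit the login form with the given credentials."""
    # Imported inside the function so the module can be loaded without Selenium installed.
    from selenium.webdriver.common.by import By

    driver.get(LOGIN_URL)
    # Assumed selectors: the first matching input on the page is used for each.
    driver.find_element(By.XPATH, "//input[@name='acct']").send_keys(username)
    driver.find_element(By.XPATH, "//input[@type='password']").send_keys(password)
    driver.find_element(By.XPATH, "//input[@value='login']").click()
```

Keeping the credentials as function arguments avoids hard-coding them in the script.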

Easy, right? Now there is one important thing that is missing here. How do we know if we are logged in?

We could try a couple of things:

- Check for an error message (like “Wrong password”)

- Check for one element on the page that is only displayed once logged in.

So, we're going to check for the logout button. The logout button has the ID “logout” (easy)!

We can't just check if the element is None, because all of the find_element_by_* methods raise an exception if the element is not found in the DOM. So we have to use a try/except block and catch the NoSuchElementException exception:
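The check can be sketched as follows, again with the Selenium 4 `find_element(By.ID, ...)` form. The ID "logout" comes from the text above; the function name `is_logged_in` is illustrative.

```python
def is_logged_in(driver):
    """Return True if the logout button is present on the current page."""
    # Imported inside the function so the module can be loaded without Selenium installed.
    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By

    try:
        driver.find_element(By.ID, "logout")
    except NoSuchElementException:
        # find_element raises instead of returning None when nothing matches.
        return False
    return True
```

Catching only `NoSuchElementException` keeps other failures, such as a crashed browser session, visible instead of silently reporting "not logged in".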

Taking a screenshot

We could easily take a screenshot using:

Note that a lot of things can go wrong when you take a screenshot with Selenium. First, you have to make sure that the window size is set correctly. Then, you need to make sure that every asynchronous HTTP call made by the frontend JavaScript code has finished and that the page is fully rendered.

In our Hacker News case it's simple and we don't have to worry about these issues.
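A short sketch that sets the window size before capturing, using Selenium's `set_window_size` and `save_screenshot` methods. The function name and the default dimensions are illustrative.

```python
def save_window_screenshot(driver, path, width=1920, height=1200):
    """Resize the window, then save a PNG screenshot to `path`."""
    # Set the size first so the capture does not depend on the default window.
    driver.set_window_size(width, height)
    # save_screenshot returns True on success and False on an I/O error.
    return driver.save_screenshot(path)
```

This covers only the window-size concern; waiting for asynchronous content is a separate step.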

Waiting for an element to be present

Dealing with a website that uses lots of Javascript to render its content can be tricky. These days, more and more sites are using frameworks like Angular, React and Vue.js for their front-end. These front-end frameworks are complicated to deal with because they fire a lot of AJAX calls.

If we had to worry about an asynchronous HTTP call (or many) to an API, there are two ways to solve this:

- Use a time.sleep(ARBITRARY_TIME) before taking the screenshot.

- Use a WebDriverWait object.

If you use time.sleep(), you will probably pick an arbitrary value, so you end up waiting either too long or not long enough. The website may also load slowly over your local Wi-Fi connection but ten times faster on your cloud server. With WebDriverWait, you wait only as long as it takes for your element or data to load, up to a timeout you choose.

This will wait up to five seconds for an element located by the ID “mySuperId” to be loaded. There are many other interesting expected conditions, like:

- element_to_be_clickable

- text_to_be_present_in_element

You can find more information about this in the Selenium documentation.
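An explicit wait for an element by ID can be sketched like this, using Selenium's `WebDriverWait` and `expected_conditions`. The function name `wait_for_element` is illustrative, and the five-second default mirrors the example described above.

```python
def wait_for_element(driver, element_id, timeout=5):
    """Block until the element is present in the DOM, then return it."""
    # Imported inside the function so the module can be loaded without Selenium installed.
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    # until() polls the condition and raises TimeoutException after `timeout` seconds.
    return WebDriverWait(driver, timeout).until(
        EC.presence_of_element_located((By.ID, element_id))
    )
```

A call such as `wait_for_element(driver, "mySuperId")` returns as soon as the element appears, so a fast page is not slowed down by the timeout.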

Executing Javascript

Sometimes, you may need to execute some JavaScript on the page. For example, let's say you want to take a screenshot of some information, but you first need to scroll a bit to see it. You can easily do this with Selenium:
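A minimal sketch of scrolling with `execute_script`. The function name `scroll_to_bottom` is illustrative, and scrolling to the very bottom of the page is just one possible target.

```python
SCROLL_TO_BOTTOM_JS = "window.scrollTo(0, document.body.scrollHeight);"


def scroll_to_bottom(driver):
    """Scroll the current page to the bottom by running JavaScript in it."""
    # execute_script runs the snippet in the context of the current page.
    driver.execute_script(SCROLL_TO_BOTTOM_JS)
```

On pages that load more content as you scroll, this usually has to be repeated, with a wait in between, until the page height stops growing.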

Conclusion

I hope you enjoyed this blog post! You should now have a good understanding of how the Selenium API works in Python. If you want to know more about how to scrape the web with Python don't hesitate to take a look at our general Python web scraping guide.

Selenium is often necessary to extract data from websites that use lots of JavaScript. The problem is that running lots of Selenium/Headless Chrome instances at scale is hard. This is one of the things we solve with ScrapingBee, our web scraping API.

Selenium is also an excellent tool to automate almost anything on the web.

If you perform repetitive tasks like filling forms or checking information behind a login form where the website doesn't have an API, it may be a good idea to automate them with Selenium. Just don't forget this xkcd:
